Apple previews the new AI-powered accessibility features coming with iOS 18

Apple will bring new AI-powered accessibility features to the iPhone, iPad, Mac, CarPlay and Vision Pro with iOS 18 and its other software updates this fall.

iOS 18 and iPadOS 18 will bring more than a dozen new accessibility features, including Eye Tracking, Music Haptics, Vehicle Motion Cues and Hover Typing.

Many of them appear to use artificial intelligence (AI), which is expected to be the main theme of iOS 18 and Apple's other OS updates this fall. One exciting feature aims to prevent motion sickness when using an iPhone in a moving vehicle.

The new Apple accessibility features coming this fall

Apple previewed several new accessibility features ahead of Global Accessibility Awareness Day. The announcement in the Apple Newsroom confirms many of these features are based on artificial intelligence and machine learning, giving you a taste of what’s to come with iOS 18’s rumored AI capabilities.

Navigate an iPhone or iPad with your eyes, Vision Pro-style

Eye Tracking will let you navigate an iPhone or iPad with just your eyes. Building on what it learned from the Vision Pro headset, Apple will let you move through the iOS user interface and apps using only your gaze. Eye Tracking relies on the front-facing camera and on-device machine learning.

Feel the music with haptic feedback

Music Haptics will use the iPhone's Taptic Engine, Apple's custom vibration motor, to produce refined taps, textures and vibrations so that people who are hard of hearing have a way to sense music. Apple says Music Haptics works "across millions of songs" in the Apple Music catalog. The company will also provide an API for developers to "make music more accessible in their apps."

A virtual trackpad that’s resizable

AssistiveTouch gets a virtual trackpad for navigating your iPhone or iPad by moving your finger across a small screen region. This virtual trackpad is resizable.

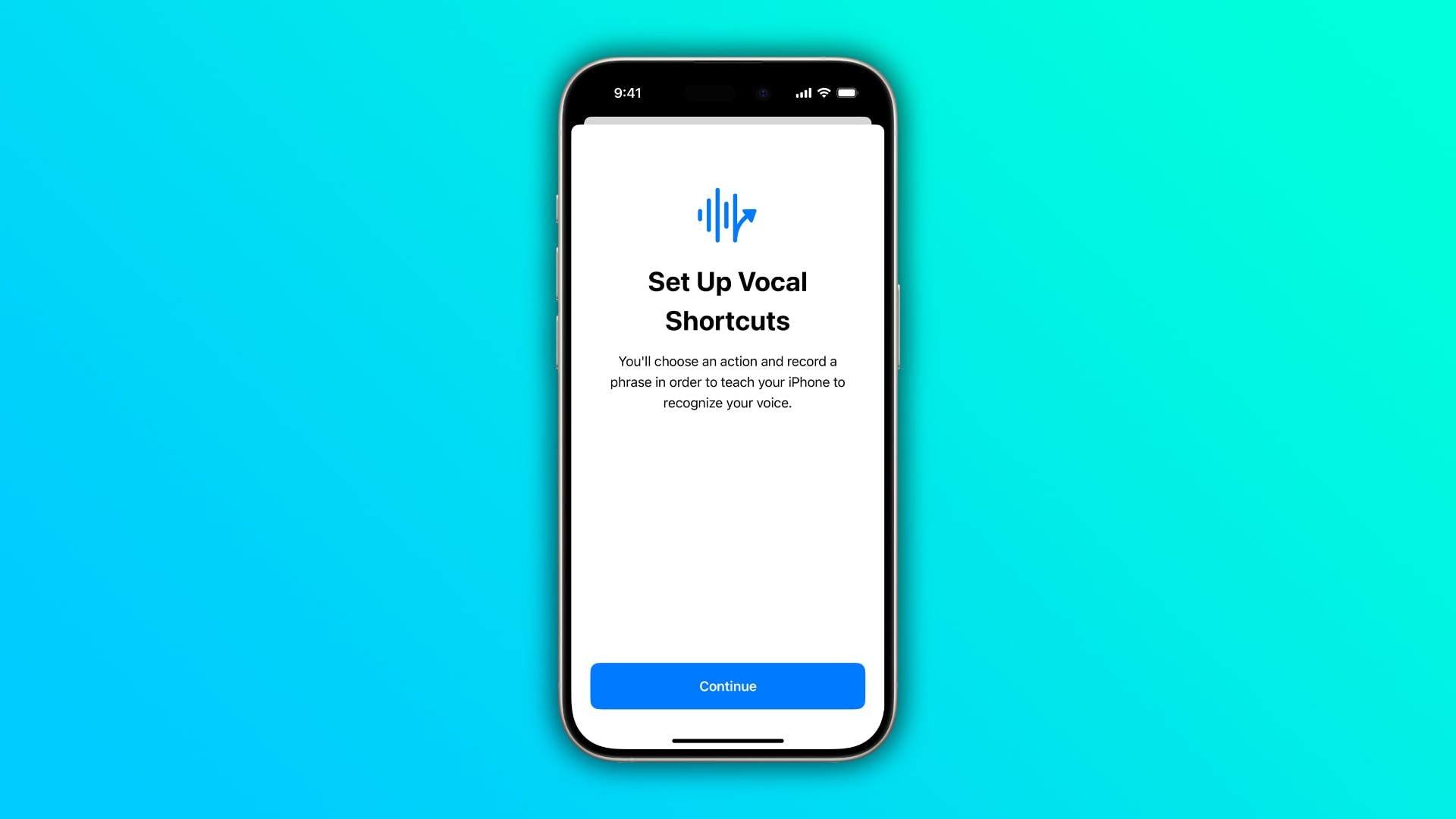

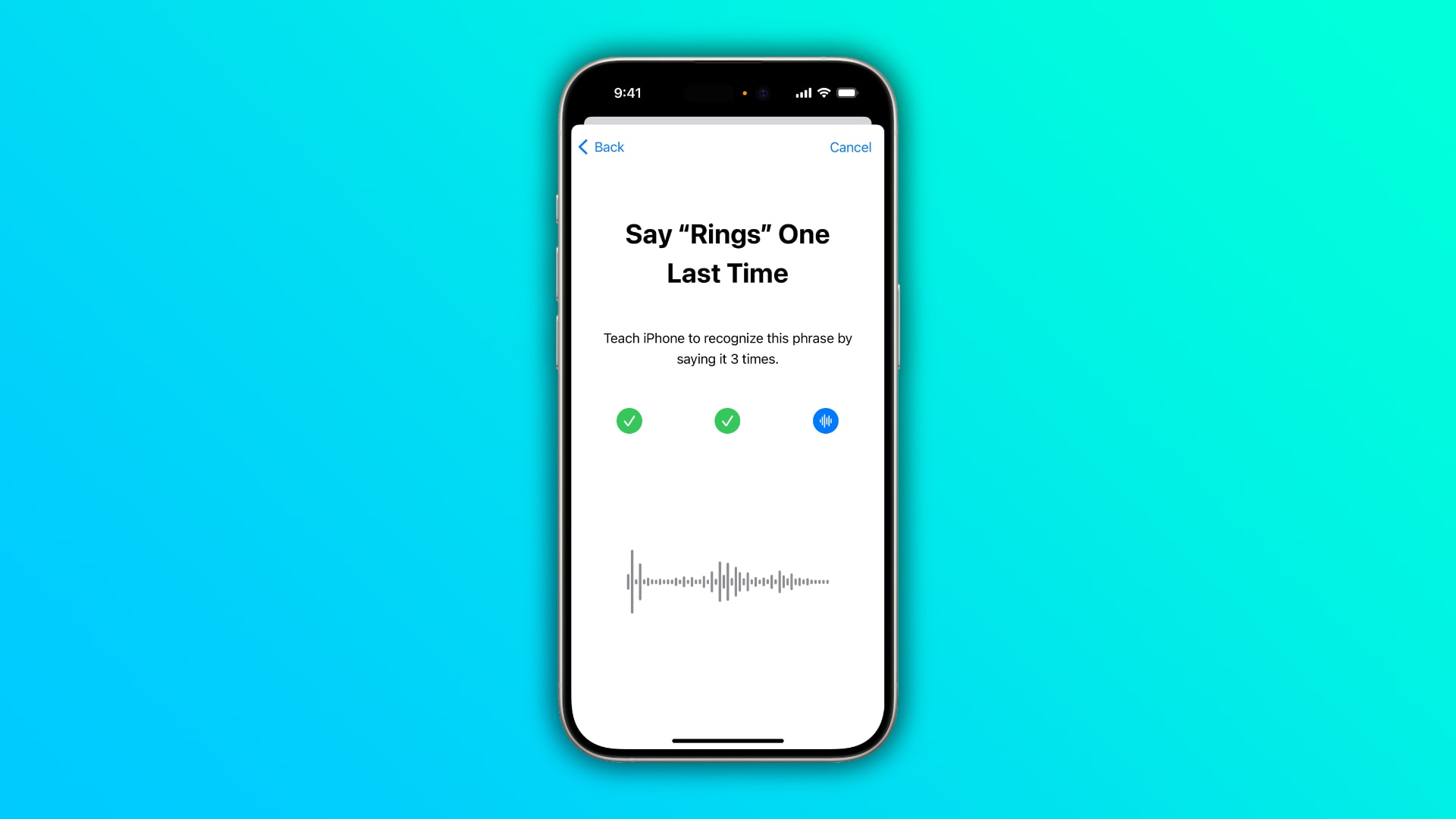

Custom Siri utterances for quick-launching shortcuts

Vocal Shortcuts is another new feature with potential. It lets you create custom utterances for Siri to launch shortcuts to complete complex tasks.

You’ll choose an action and record a custom spoken phrase three times to teach your iPhone to recognize it. This will let you trigger shortcuts and perform complex tasks by uttering an appropriate phrase.

Hover Typing enlarges typeface in text fields

Don't you hate it when a webpage or app uses a tiny typeface in a text field that forces you to squint? With Hover Typing, an iPhone will detect when you're interacting with a text field and show larger text above it as you type. This will work anywhere you can enter text, including when chatting in Messages.

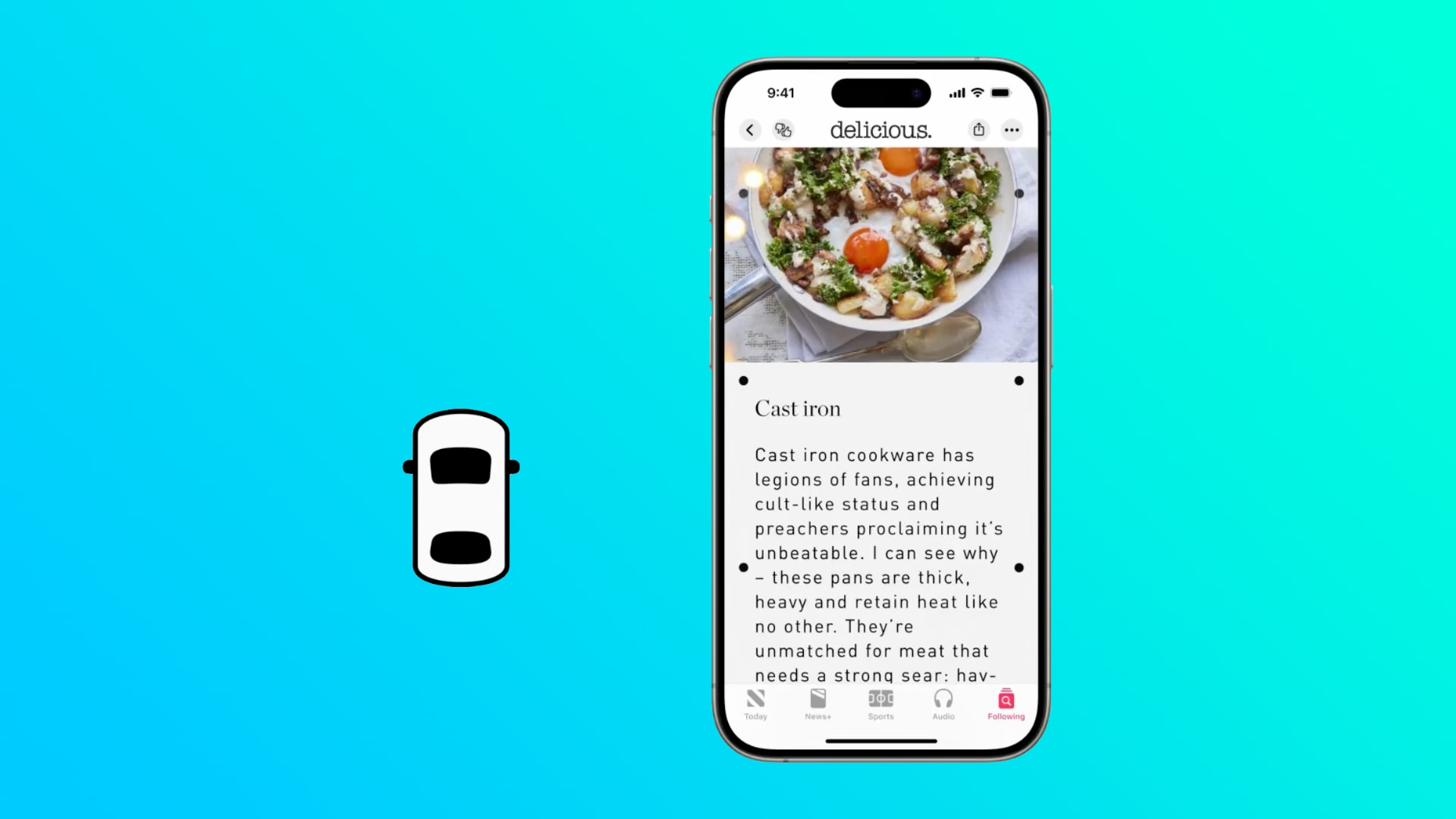

Prevent motion sickness when using an iPhone in a moving vehicle

Motion sickness is often caused by conflicting sensory input: your eyes perceive one thing while your body feels another, as when you stare at static content on an iPhone while riding in a moving vehicle.

Vehicle Motion Cues aims to alleviate this sensory conflict by animating dots along the screen edges to reflect changes in vehicle motion. The feature uses the iPhone's built-in sensors and can be set to turn on automatically when it detects you're in a moving vehicle, or you can toggle it in Control Center.

CarPlay gaining Voice Control, Color Filters and Sound Recognition

Voice Control for CarPlay will let you navigate the CarPlay interface and apps with your voice. The feature will support custom vocabularies and complex words. CarPlay will also pick up two existing accessibility features from the iPhone: Color Filters and Sound Recognition.

Color Filters makes the CarPlay interface visually easier to use for people who are colorblind, and CarPlay will also gain the Bold Text and Large Text options. Sound Recognition will notify the user about significant traffic sounds like car horns and sirens.

VoiceOver: Custom keyboard shortcuts and more

Mac users will be able to customize VoiceOver keyboard shortcuts. VoiceOver will also gain new voices, a flexible Voice Rotor and custom volume control.

Reader Mode and other Magnifier tidbits

Magnifier will gain a new feature called Reader Mode, but Apple hasn't said what it does. It sounds like Reader Mode in Safari, so maybe it will present text recognized in your surroundings (restaurant menus, signage, etc.) as plain text. iPhone 15 Pro and iPhone 15 Pro Max users will be able to quickly open Magnifier's Detection Mode with a press of the Action button.

4 new accessibility features for Vision Pro

Apple hasn’t forgotten about visionOS. In the fall, visionOS 2 will gain four accessibility-related improvements. The existing Live Captions feature will be available across the system, not just on FaceTime.

“Apple Vision Pro will add the capability to move captions using the window bar during Apple Immersive Video, as well as support for additional Made for iPhone hearing devices and cochlear hearing processors,” Apple says.

Vision Pro will also get the Reduce Transparency, Smart Invert and Dim Flashing Lights features, which are available on other Apple devices but didn't make the cut for the initial visionOS release.

Tidbits: Personal Voice upgrades, Listen for Atypical Speech

Braille users will get a new way to start and stay in Braille Screen Input for faster control and text editing. Braille Screen Input is also gaining Japanese language support, Dot Pad will support multi-line braille, and there will be an option to choose different input and output tables.

Personal Voice will be available in Mandarin Chinese. Apple has changed the Personal Voice onboarding to use shortened phrases for people who have difficulty pronouncing or reading full sentences. Live Speech will include categories and "simultaneous compatibility with Live Captions."

Voice Control will support custom vocabularies and complex words, and Switch Control will use an iPhone’s camera to detect finger-tap gestures as switches.

Lastly, a feature called Listen for Atypical Speech will boost speech recognition by using on-device machine learning to recognize your speech patterns. Apple says this feature enhances speech recognition “for a wider range of speech.”

Powered by AI, of course!

I found it interesting that many of the above features make heavy use of artificial intelligence and machine learning. I counted: the Apple Newsroom announcement mentions "artificial intelligence" and "machine learning" three times each.

This is just a glimpse of the AI-powered features expected to come to iOS 18 and Apple's other software platforms this fall, especially Siri improvements based on on-device large language models and generative AI capabilities.

Source link: https://www.idownloadblog.com/2024/05/15/apple-previews-ios-18-accessibility-features/