Visual intelligence gets a boost in iOS 26

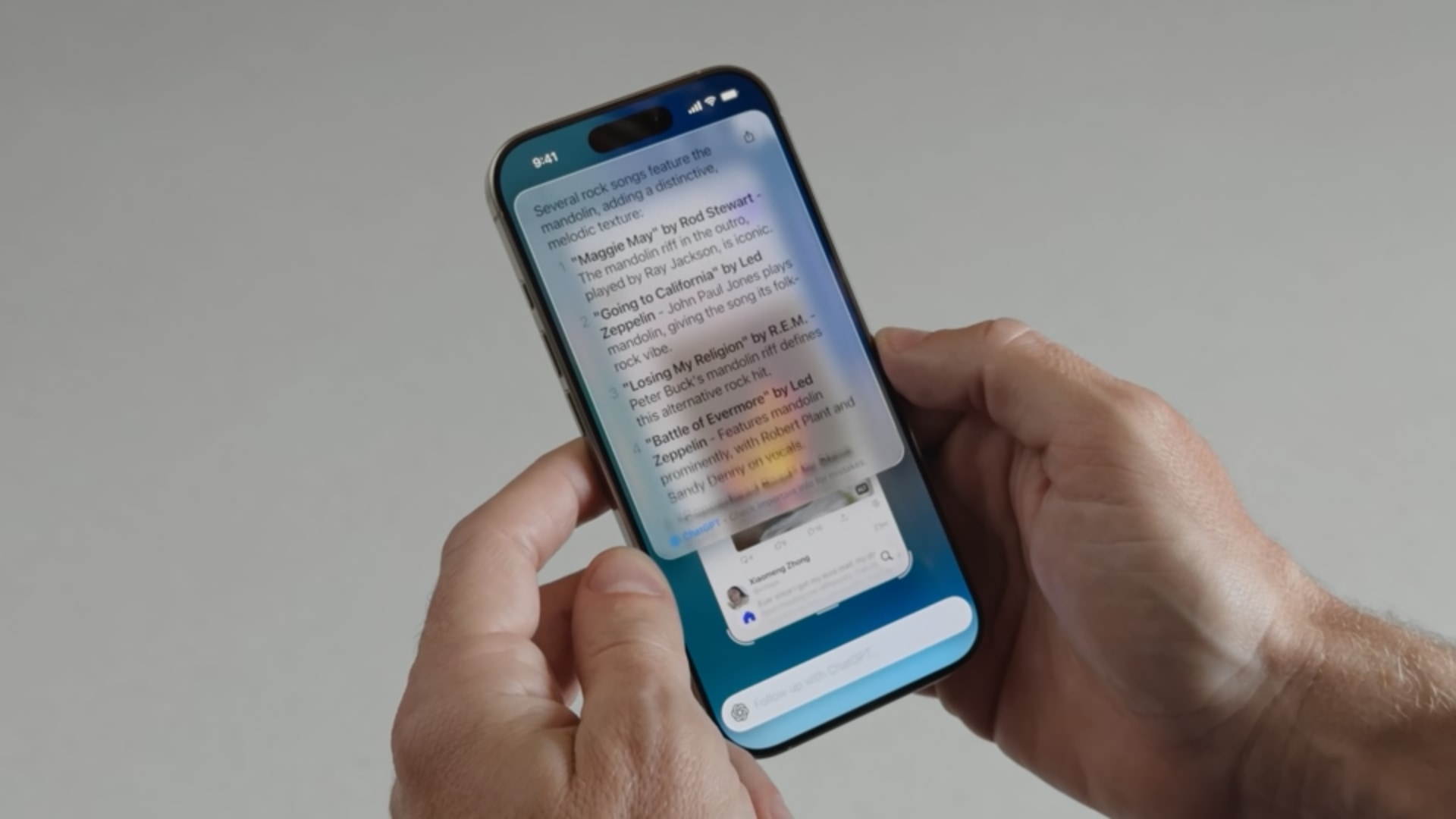

In iOS 26, visual intelligence extends to your installed iPhone apps, letting you learn more about anything you see on screen simply by taking a screenshot.

An Apple executive demonstrated the improved visual intelligence feature of Apple Intelligence in iOS 26 by opening a social media app on an iPhone and scrolling through posts. When he came across a post showing a man wearing a nice jacket, he took a screenshot, just as you normally would, to learn more about it.

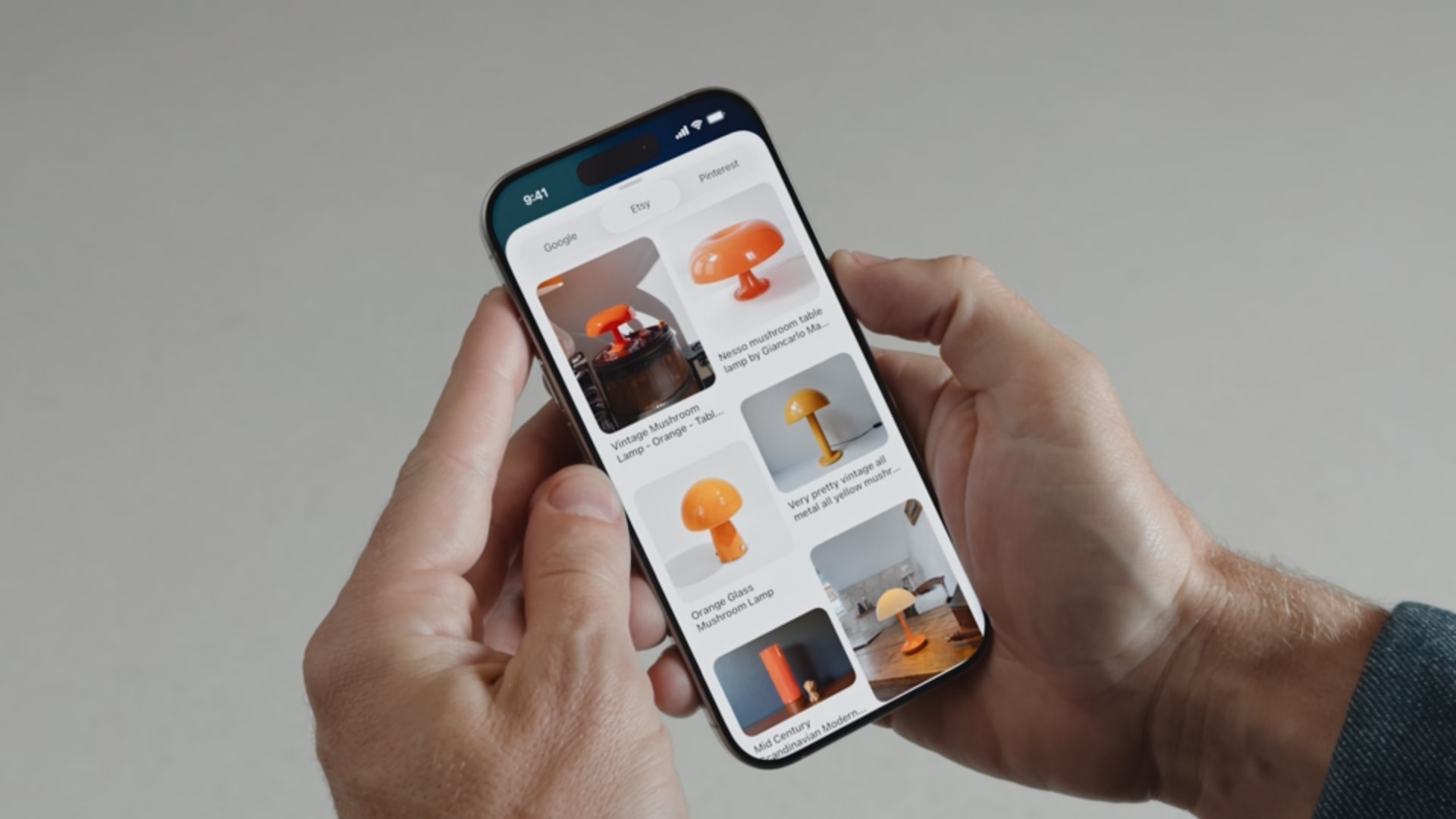

This produced a new button in the bottom-right corner, alongside the usual screenshot thumbnail in the bottom-left corner. Visual intelligence then went to work, using Google’s image search (as it usually does) to find matching results on the web.


You can also ask a more complex question about anything you’ve screenshotted by pulling up the prompt interface, where you can type a query for ChatGPT to answer.

Apple Intelligence also lets you add events from real-world flyers and posters to your calendar, and this now works for in-app content too. Take a screenshot in an app, and if Apple Intelligence detects an event in the app’s text or images, you’ll see an option to add it to your calendar.

Lastly, visual intelligence extends systemwide search to apps, so you can find content across your installed apps. Apple also previewed Liquid Glass, a redesigned user interface across its software platforms. For more information, keep an eye on Apple Newsroom and apple.com/ios.

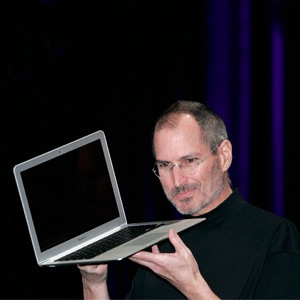

Apple Intelligence: A progress report

Apple also gave a progress update on Apple Intelligence and, as expected, had little to say about Siri: the wait for an AI-infused Siri will continue into next year. Although Apple Intelligence isn’t the star of this year’s WWDC, it still gets some attention in iOS 26 and Apple’s other software updates.

Apple Intelligence will support more languages as it expands to other parts of the world. It also now uses AI models that are both more capable and more efficient, which could mean faster performance and lower battery consumption.

In addition, existing Apple Intelligence features are now available in more parts of the system. Apple is also opening up its on-device large language models to developers through a new framework called Foundation Models. Third-party apps can now use the same on-device models that Apple relies on to deliver their own AI features.

There are also minor Genmoji improvements, such as the ability to create a new custom emoji by combining two existing emoji, using your voice or a written prompt. Image Playground is now available in more places and adds a new oil painting style.

Source link: https://www.idownloadblog.com/2025/06/09/apple-wwdc25-ios-26-visual-intelligence-new-features/
