iOS 18.4 brings Apple Intelligence’s visual intelligence feature to the iPhone 15 Pros with four new ways to trigger it

iOS 18.4 brings Apple Intelligence’s Google Lens-like visual intelligence feature to the older iPhone 15 Pro and iPhone 15 Pro Max models with four new ways to trigger it.

Support for visual intelligence has been confirmed in the second beta of iOS 18.4, which Apple released for developer testing today. However, there are still a few more betas to go before the update launches in April.

Apple recently confirmed to John Gruber that visual intelligence would be coming to the iPhone 15 Pros “in a future software update.” Visual intelligence has been exclusively available on the iPhone 16 lineup, where it’s triggered by pressing and holding the new Camera Control capture button.

iOS 18.4 brings visual intelligence to the iPhone 15 Pros

The new iPhone 16e launched with support for visual intelligence even though it has no Camera Control, giving us a glimpse into how the feature would be invoked on older phones that can run Apple Intelligence, like the iPhone 15 Pro and iPhone 15 Pro Max: via the Action button or through the Control Center.

In iOS 18.4, you can go to Settings > Action Button and swipe to the “Visual Intelligence” option to assign it to the button. This lets you press and hold the Action button from anywhere in the system to instantly launch visual intelligence.

Or, you can open the Control Center and touch and hold an empty slot until the icons start jiggling, then hit “Add a Control” at the bottom and type “visual intelligence” into the search field in the controls gallery to find the new control. Now you can swipe down from the top-right corner of the screen and hit the control to launch visual intelligence without exiting the app you’re using.

5 ways to activate visual intelligence

Because iOS 18 lets you replace the default camera and flashlight icons on the Lock Screen with any control from the Control Center gallery, you can put a visual intelligence button there as well.

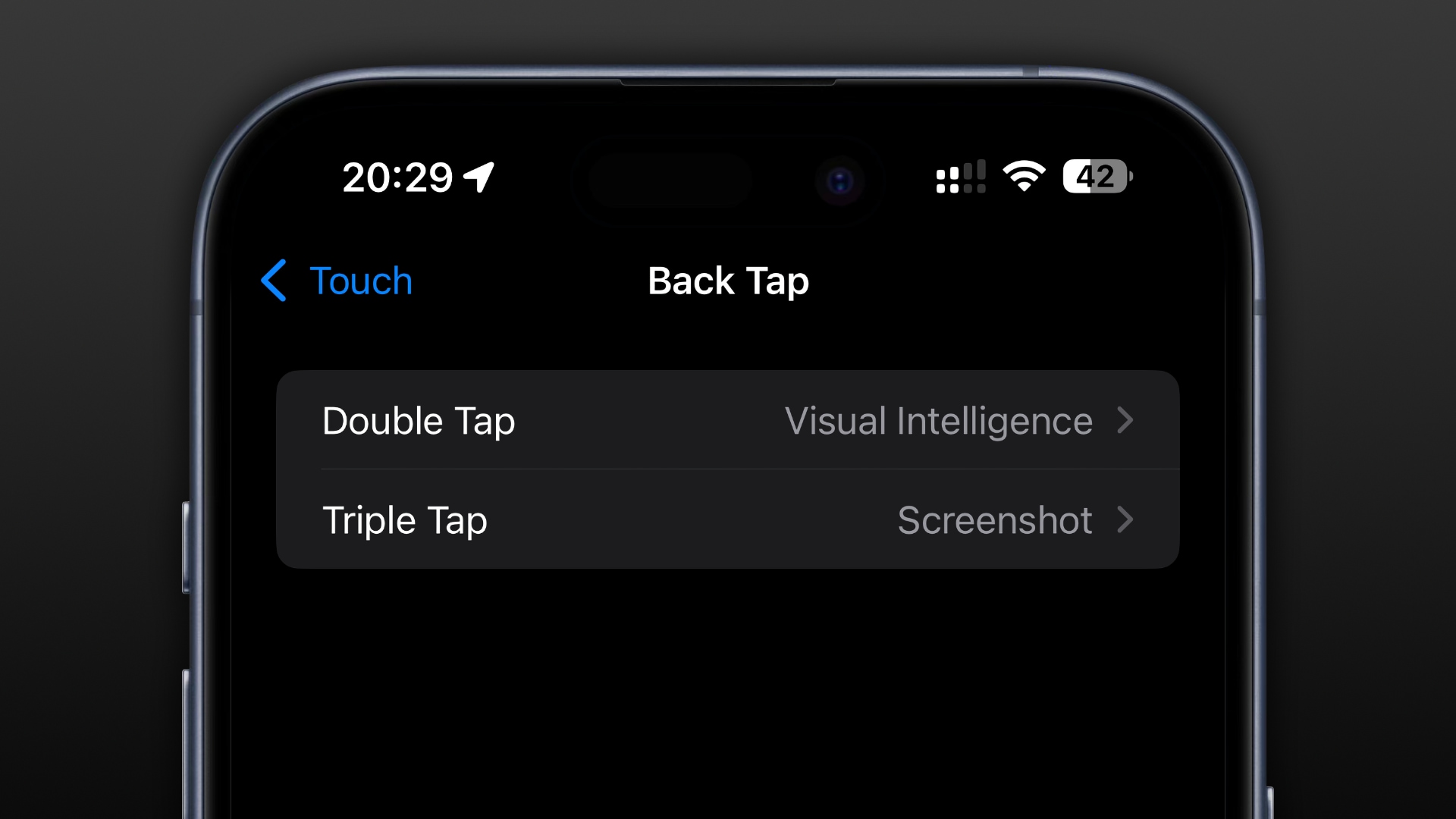

Let’s not forget about the under-appreciated Back Tap feature, which also supports these widgets. You can trigger visual intelligence by double- or triple-tapping the back of your phone.

In other words, iOS 18.4 gives you five different ways to trigger visual intelligence. Four of them (the Action button, the Control Center widget, a Lock Screen shortcut and a Back Tap gesture) are available on the iPhone 15 Pro and iPhone 16 lineups. The fifth, the Camera Control trigger, requires an iPhone 16, iPhone 16 Plus, iPhone 16 Pro or iPhone 16 Pro Max, as the iPhone 16e has no Camera Control.

How visual intelligence works

Visual intelligence is a Google Lens-like feature that lets you learn more about the world around you by pointing the iPhone camera at people, animals, plants, insects or places like restaurants and other businesses. You can also snap an image and send it to ChatGPT for analysis or to Google to perform an image search.

Visual intelligence can also act on text in the viewfinder, such as the text on a concert poster or a restaurant menu. When such text is detected, you get options to add an event to your calendar, send an email message, visit a website or call a phone number. You can also ask visual intelligence to summarize, translate or read the text aloud.

Source link: https://www.idownloadblog.com/2025/03/03/apple-ios-18-4-brings-visual-intelligence-iphone-15-pro-lineup/
