Image Playground exhibits racial biases when generating AI images from prompts

Apple’s AI image generation model, Image Playground, shows clear racial and social biases and struggles to identify skin tone and hair texture.

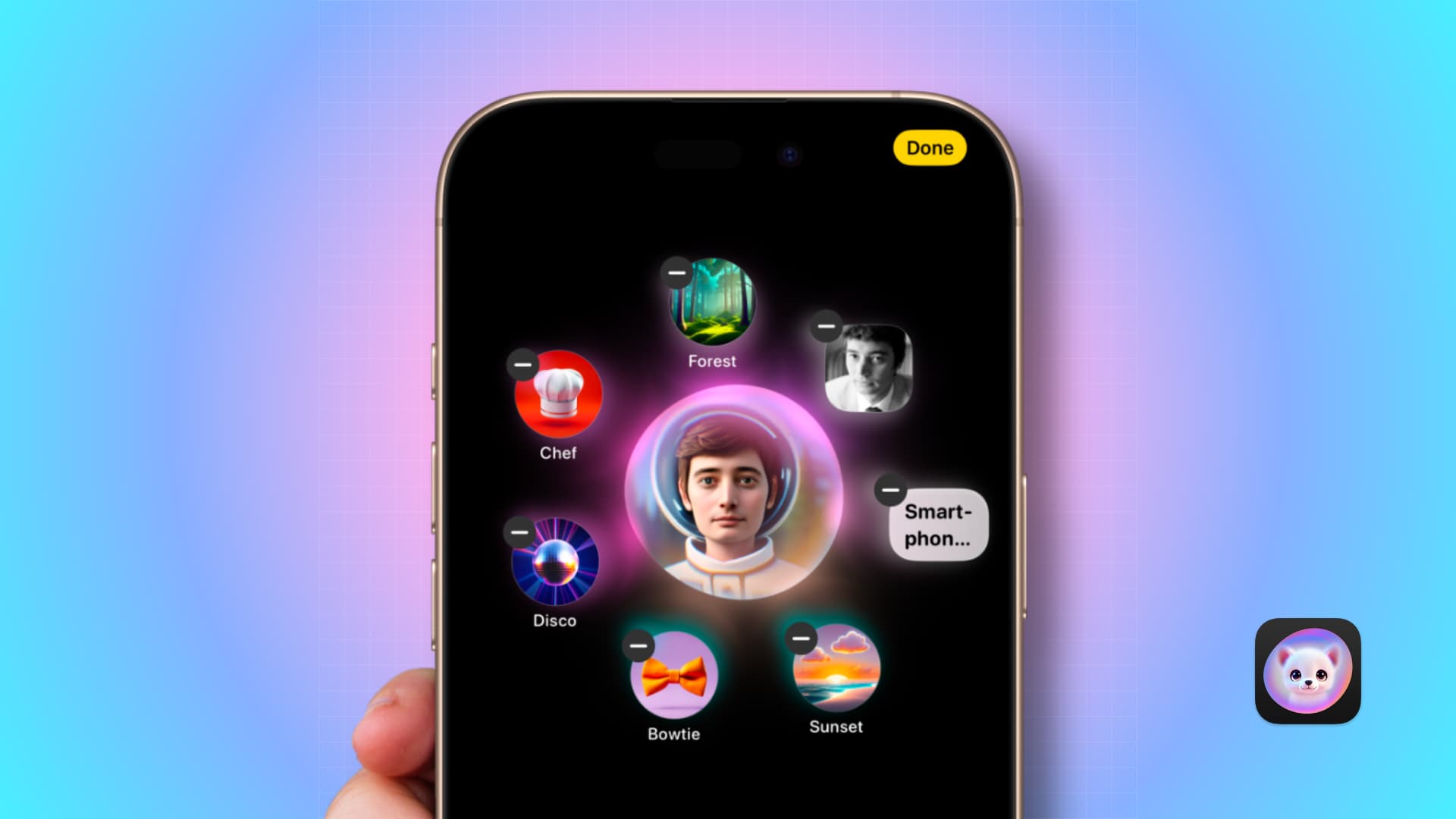

Image Playground is part of the Apple Intelligence suite of AI tools available on compatible iPhones, iPads and Macs. The feature offers only two drawing styles, Animation and Illustration, and renders faces only in cartoony styles to minimize bias. Nevertheless, it has been found to exhibit racial bias when creating images of people from prompts.

Machine learning scientist Jochem Gietema tested Image Playground and found that it struggles to properly identify skin tone and hair texture. He discovered this by giving the feature a photo of his face as a starting point, then using a series of prompts to try to influence the skin tone of the resulting image.

Apple’s cartoony image generator has some bias issues

“In some images,” Gietema writes on his blog, “my skin tone gets significantly darker, and my hair changes quite a bit as if Image Playground doesn’t know what facial features are present in the image.”

Image Playground produced stylized images of his face with lighter skin tones when prompted with adjectives such as “affluent, rich, successful, prosperous and opulent.” It used darker skin tones for prompts containing the adjectives “poor, impoverished, needy, indigent, penniless, destitute and disadvantaged.”
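For readers who want to put a number on a shift like this, here is a minimal Python sketch. It is an illustration only, not Gietema's method: it assumes you have exported the generated images yourself and sampled a handful of skin pixels from each one as RGB triples. The function names are hypothetical. The luminance formula is the standard sRGB relative luminance.

```python
from statistics import mean

def srgb_to_linear(channel):
    """Convert one 8-bit sRGB channel (0-255) to linear light (0.0-1.0)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(rgb):
    """Standard sRGB relative luminance: 0.0 is black, 1.0 is white."""
    r, g, b = (srgb_to_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def mean_luminance(pixels):
    """Average luminance of the skin pixels sampled from one image."""
    return mean(relative_luminance(p) for p in pixels)

def luminance_gap(group_a, group_b):
    """Mean luminance of group_a minus group_b.

    Each group is a list of images; each image is a list of (R, G, B)
    skin-pixel samples. A positive result means group_a is lighter on average.
    """
    a = mean(mean_luminance(img) for img in group_a)
    b = mean(mean_luminance(img) for img in group_b)
    return a - b
```

You would pass the pixel samples from the “affluent” images as `group_a` and those from the “poor” images as `group_b`. A consistently positive gap across many generations would match the pattern described above. Sampling the pixels by hand is a simplification, and a more careful analysis would need many more images.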

He found similar racially driven biases with prompts focused on sports, jobs, music, dance, and clothing style. Unfortunately, Apple hasn’t open-sourced Image Playground’s AI model, so researchers can’t examine it more closely.

Image Playground cannot be used for deepfakes

Apple hasn’t brought a photo-realistic style to Image Playground because it didn’t want the tool to create deepfakes that could hurt its public image and tarnish its brand. Other guardrails are in place. For example, you cannot prompt Image Playground with negative words or upload pictures of celebrities.

All AI image generators are prone to racial biases. Even the biggest ones, like Stability AI’s Stable Diffusion and OpenAI’s DALL·E, reproduce common stereotypes. For example, researchers found that both tools associate the word “American” with wealth, the word “Africa” with poverty and the word “poor” with dark skin tones.

AI image generators tend to give racist and sexist results

Racial bias isn’t intentionally programmed into AI image generators. Instead, racial and social bias is caused by a lack of diversity in the models’ training datasets. It’s unclear whether prompt engineering could counteract biases in the datasets and improve the results. Improving the datasets themselves might be a better way to tackle the problem.

We’re only in the first stages of the AI revolution. Much of this is uncharted territory, with AI companies such as OpenAI, Google, Microsoft, Apple, and others constantly learning from their mistakes and making adjustments. I’m convinced racial biases won’t be an issue for AI image generation a few years from now.

Source link: https://www.idownloadblog.com/2025/02/18/image-playground-racial-bias-issue/
