Apple enables developers to detect human body and hand poses for screenless app interactions

Apple has added new capabilities to its Vision framework in iOS 14, iPadOS 14, tvOS 14 and macOS 11.0 Big Sur that let developers support cool screenless app interactions.

Enabling hand gestures in apps

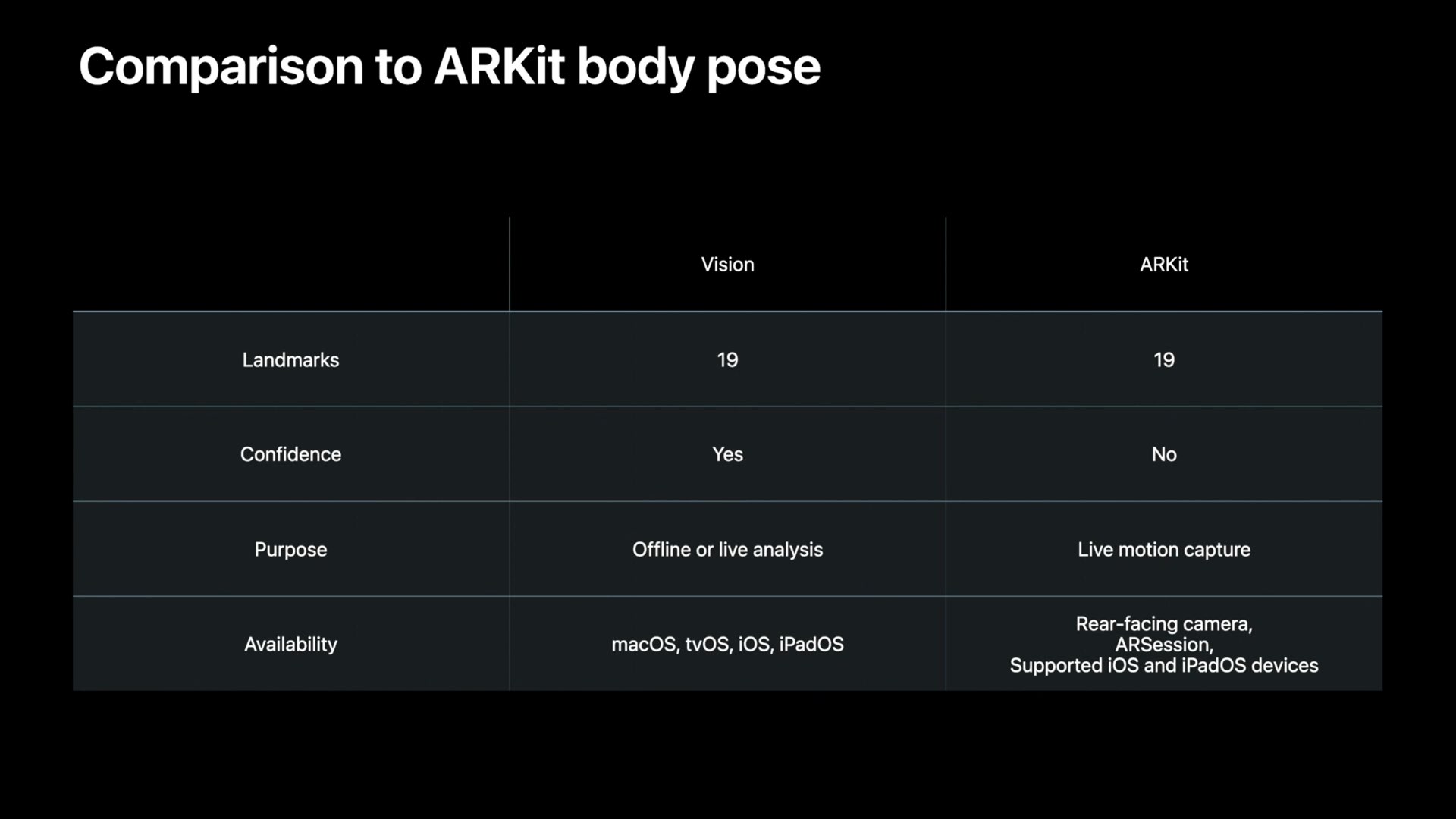

As explained in Apple’s WWDC 2020 session titled “Detect Body and Hand Pose with Vision”, the updated Vision framework in iOS/iPadOS 14, tvOS 14 and macOS 11 takes advantage of new computer vision algorithms to perform a variety of tasks on input images and video.

In addition to existing features such as face and face landmark detection, text detection, barcode recognition, image registration, general feature tracking and object classification/detection, the framework now supports detecting body and hand poses in photos and video.

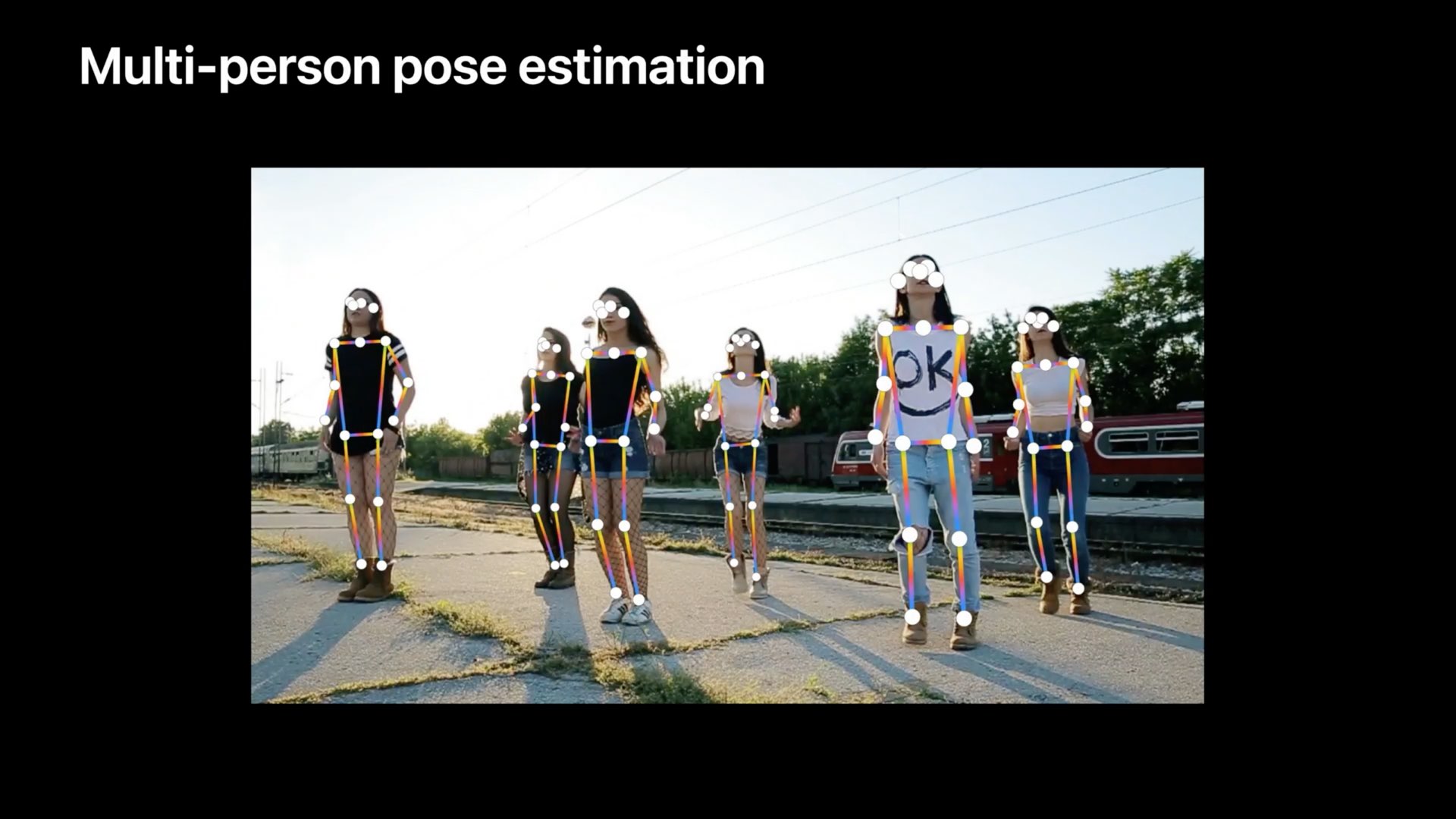

This lets apps analyze the gestures, movements and poses performed by human bodies and hands. It’s not limited to static images: the refreshed framework can also perform this kind of demanding analysis on a live video feed coming from the camera built into the user’s device.
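For developers curious what this looks like in practice, here is a minimal Swift sketch of a hand pose request on a single image. `VNDetectHumanHandPoseRequest`, `VNImageRequestHandler` and the `.thumbTip`/`.indexTip` joint names come from the Vision framework; the function name `detectFingertips` and the 0.3 confidence cutoff are assumptions made for illustration. It also assumes a recent SDK where `results` is already typed (older SDKs need a cast to `VNHumanHandPoseObservation`).

```swift
import Vision
import CoreGraphics

/// Returns the thumb-tip and index-tip locations of the first detected hand,
/// in Vision's normalized image coordinates, or nil if no usable hand is found.
/// `detectFingertips` is a hypothetical helper, not part of Vision.
func detectFingertips(in image: CGImage) throws -> (thumb: CGPoint, index: CGPoint)? {
    let request = VNDetectHumanHandPoseRequest()
    request.maximumHandCount = 1  // only look for one hand in this sketch

    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    // No observation means no hand was detected in the image.
    guard let observation = request.results?.first else { return nil }

    let thumbTip = try observation.recognizedPoint(.thumbTip)
    let indexTip = try observation.recognizedPoint(.indexTip)

    // Assumed cutoff: skip low-confidence points (e.g. partly hidden fingers).
    guard thumbTip.confidence > 0.3, indexTip.confidence > 0.3 else { return nil }
    return (thumbTip.location, indexTip.location)
}
```

For a live camera feed, the same request could be performed on each frame by creating the handler from the capture output's sample buffer instead of a `CGImage`.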

Why you should care

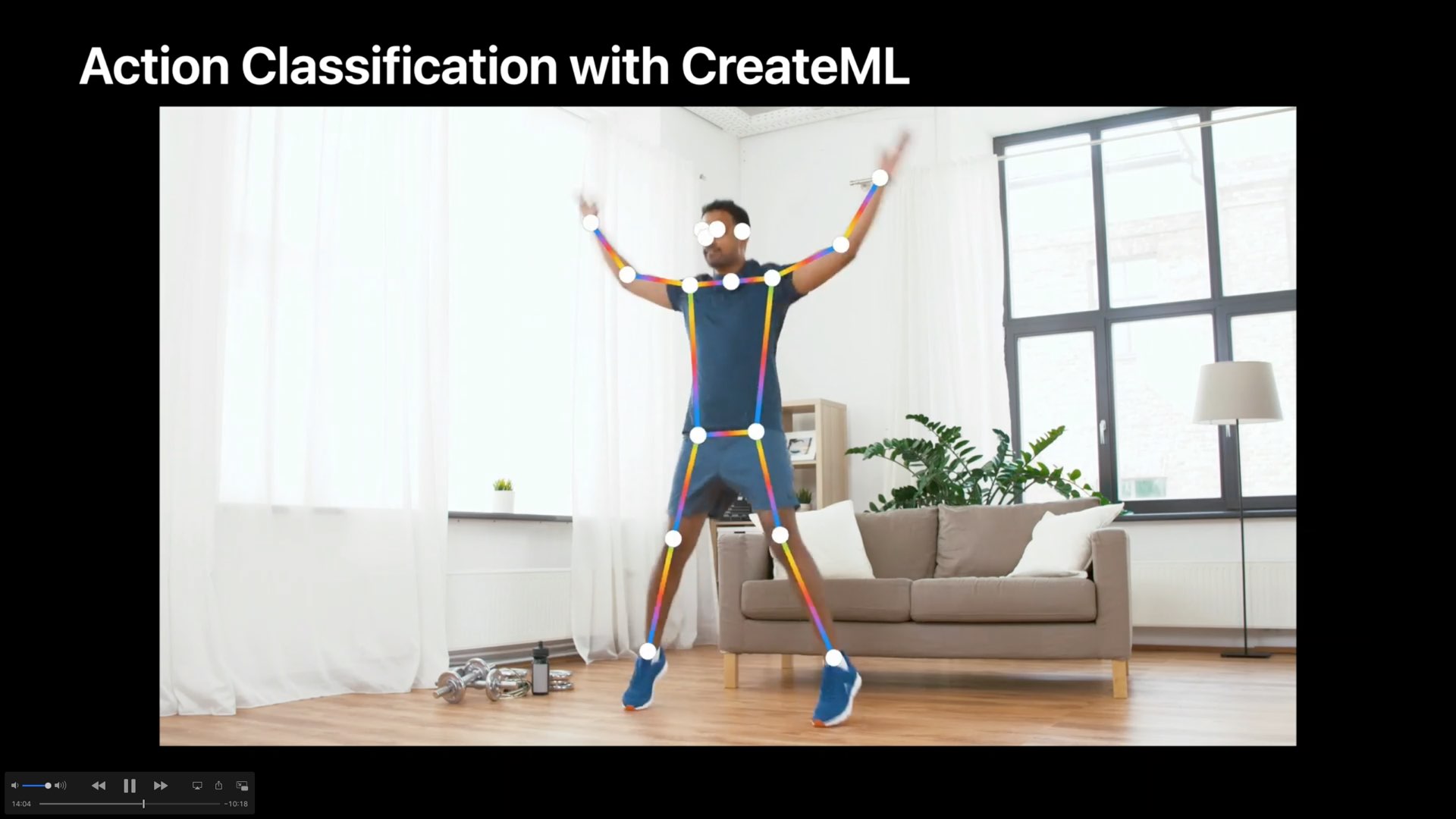

By analyzing the poses, movements and gestures of people, apps taking advantage of the framework can offer a variety of cool new features not possible before. The provided examples include a fitness app that could intelligently classify your body moves.

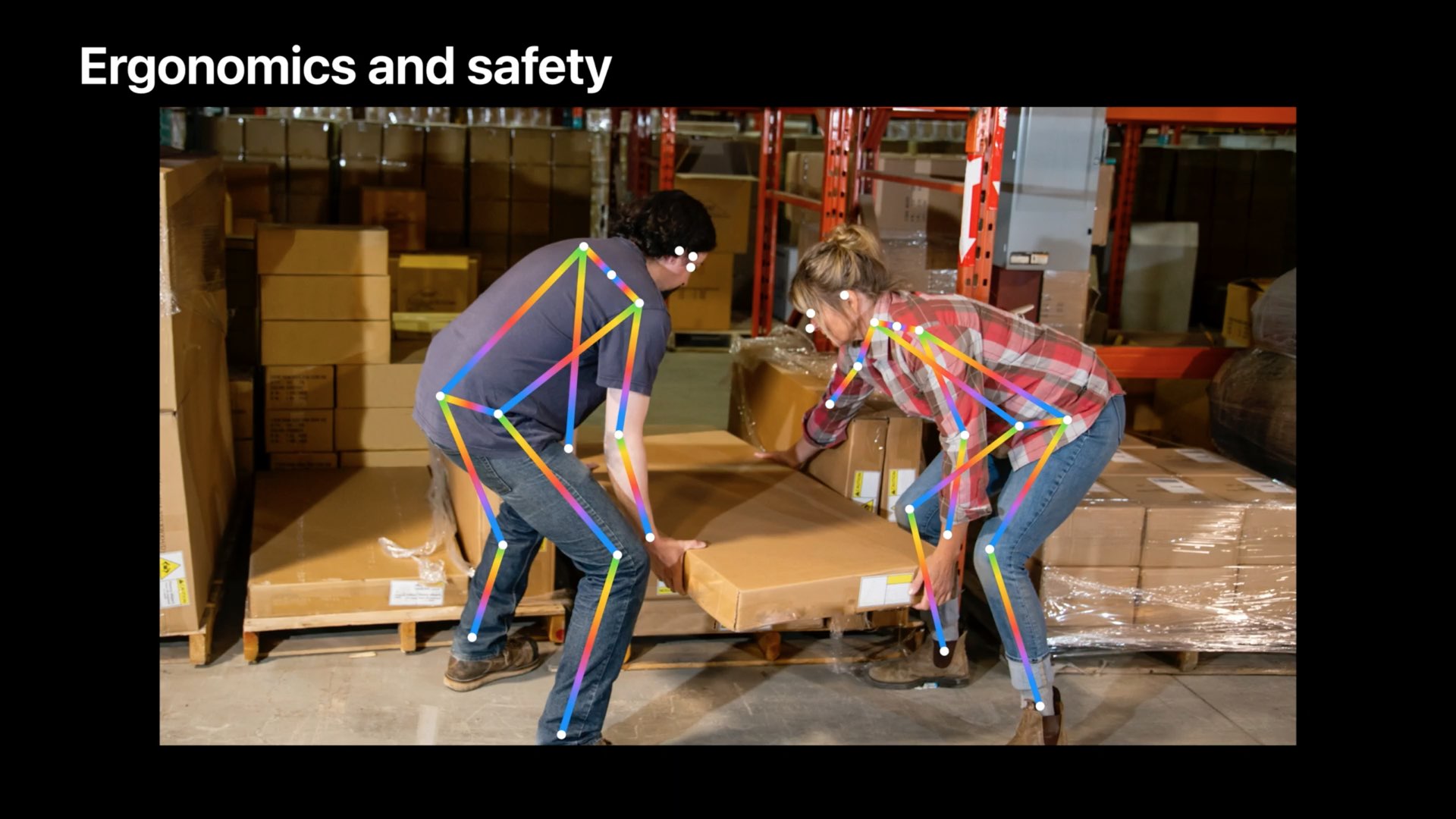

Or, a safety app that could help train employees on correct ergonomic postures.
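To make the posture idea a bit more concrete, here is a rough Swift sketch of how an app might turn three detected joints into an angle, for instance the bend of an elbow. The `jointAngle` function is a hypothetical helper, not a Vision API; in a real app the three points might come from a body pose observation's `.leftShoulder`, `.leftElbow` and `.leftWrist` joints.

```swift
import Foundation

/// Angle in degrees (0...180) at `vertex`, formed by the segments
/// vertex→a and vertex→b. Hypothetical helper for illustration only.
func jointAngle(a: CGPoint, vertex: CGPoint, b: CGPoint) -> Double {
    let v1 = (x: Double(a.x - vertex.x), y: Double(a.y - vertex.y))
    let v2 = (x: Double(b.x - vertex.x), y: Double(b.y - vertex.y))
    let dot = v1.x * v2.x + v1.y * v2.y
    let cross = v1.x * v2.y - v1.y * v2.x
    // atan2 of the cross and dot products gives the signed angle between
    // the two segments; abs() drops the direction of rotation.
    return abs(atan2(cross, dot)) * 180 / Double.pi
}

// Shoulder directly above the elbow, wrist straight out to the side.
let elbow = jointAngle(a: CGPoint(x: 0.5, y: 0.8),
                       vertex: CGPoint(x: 0.5, y: 0.5),
                       b: CGPoint(x: 0.8, y: 0.5))
print(elbow)
```

An ergonomics or fitness app could then compare such angles against whatever range it considers a correct posture.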

Another example mentioned in Apple’s developer session is a video editing app that could leverage the framework to find videos based on pose similarity. Importantly, hand pose analysis enables developers to bring gesture recognition into their apps.

Triggering camera capture with a specific hand gesture

Hand gesture support makes possible a whole new form of screenless app interactions, such as “drawing” in an app by moving your finger through the air, without touching the display at all. Other examples include switching between app modes with ease, matching emoji to specific gestures, triggering the camera shutter with a unique hand gesture and much more.
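As a rough illustration of how a gesture like a pinch could be recognized, the Swift sketch below takes a thumb-tip and an index-tip location, such as the normalized points Vision reports for a detected hand, and checks how close together they are. The `PinchState` enum, the `pinchState` function and the 0.05 threshold are all assumptions for this example, not part of Apple's API.

```swift
import Foundation

/// Hypothetical gesture states for this sketch (not Vision types).
enum PinchState { case pinched, apart }

/// Classifies a pinch from two fingertip locations given in normalized
/// coordinates (0...1 on both axes). The default threshold is an assumed
/// value that a real app would need to tune.
func pinchState(thumbTip: CGPoint, indexTip: CGPoint, threshold: Double = 0.05) -> PinchState {
    let dx = Double(thumbTip.x - indexTip.x)
    let dy = Double(thumbTip.y - indexTip.y)
    let distance = (dx * dx + dy * dy).squareRoot()
    return distance < threshold ? .pinched : .apart
}

// Fingertips almost touching: treated as a pinch.
let state = pinchState(thumbTip: CGPoint(x: 0.50, y: 0.50),
                       indexTip: CGPoint(x: 0.52, y: 0.51))
print(state)  // prints "pinched"
```

An app could start “drawing” or fire the camera shutter when the state flips to pinched. A production version would probably want the state to hold for several consecutive frames before acting, and would convert the points to view coordinates first, since normalized distances are stretched on non-square images.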

Matching hand gestures to their corresponding emoji symbols

Areas needing improvement

Apple’s session video mentions that the aforementioned features work well with the vast majority of common hand and body poses, but there are some notable exceptions.

Hand and body pose detection is available across iOS, iPadOS, tvOS and macOS

Users wearing gloves or loose, flowing clothing may throw off the framework. The same goes for detecting hand and body poses on individuals who are bent over or partially obstructed.

Thoughts on hand gestures?

Do you think we need apps that support hand gestures?

Apple’s session video provides a great overview of a few specific types of apps that could benefit from these new features, but what about other apps?

And finally, do screenless app interactions make any sense to you?

Chime in with your thoughts in the comments section below!

Source link: https://www.idownloadblog.com/2020/06/25/wwdc-2020-apple-vision-framework-improvements/
